AI is all the RAGe

Like any self-respecting geek, I jumped on the LLM bandwagon. Along with almost everyone else, I spent time analysing the capabilities, pushing the boundaries, playing and learning. I guess both the AI I was exploring and I were in a "learning cycle."

And it was fun.

Until it wasn't, so I built an app to try and find the solution.

As we all know, AI today shines in its command of spoken and written language and can generate impressive content. It has some remarkable reasoning skills, but it is simply not great at facts. And facts matter. A lot.

Intuition is a powerful tool. Probably the most powerful tool we have in our arsenal, and what we believed made us human. Even in our fiction, when we have imagined the future of advanced and powerful AIs, we always imagined a hyper-rational, fact-based mind with no feelings. What we ended up with is a machine working solely on gut feeling, with blatant disregard for cold facts, and with an extremely astute perception of our emotions.

We can, of course, try to cram more data into our models. We can train specialised models or augment models with specific knowledge. But, from what I know about the technology behind it, there will always, inevitably, be some level of randomness in the output. Not to mention how hard it is to explain those results.

To test this, and to be able to see how much of this power it is possible to contain in a consumer-level device, I embarked on a little quest to create an AI-powered application which will curate photographs for me. Not just any set of photos. My photos.

I don't know about you, but I have hundreds of thousands of images stretching back for decades. Hundreds of thousands of moments of my life which only have meaning in my mind, as the story around them is locked inside.

What will happen if and when some of my descendants stumble upon this dusty digital chest when I'm gone? Can I create an app which will take a photo, along with info about me, the place, and that moment, and tell a story? Maybe not as intimately as I would tell it, but well enough to give the viewer some context.

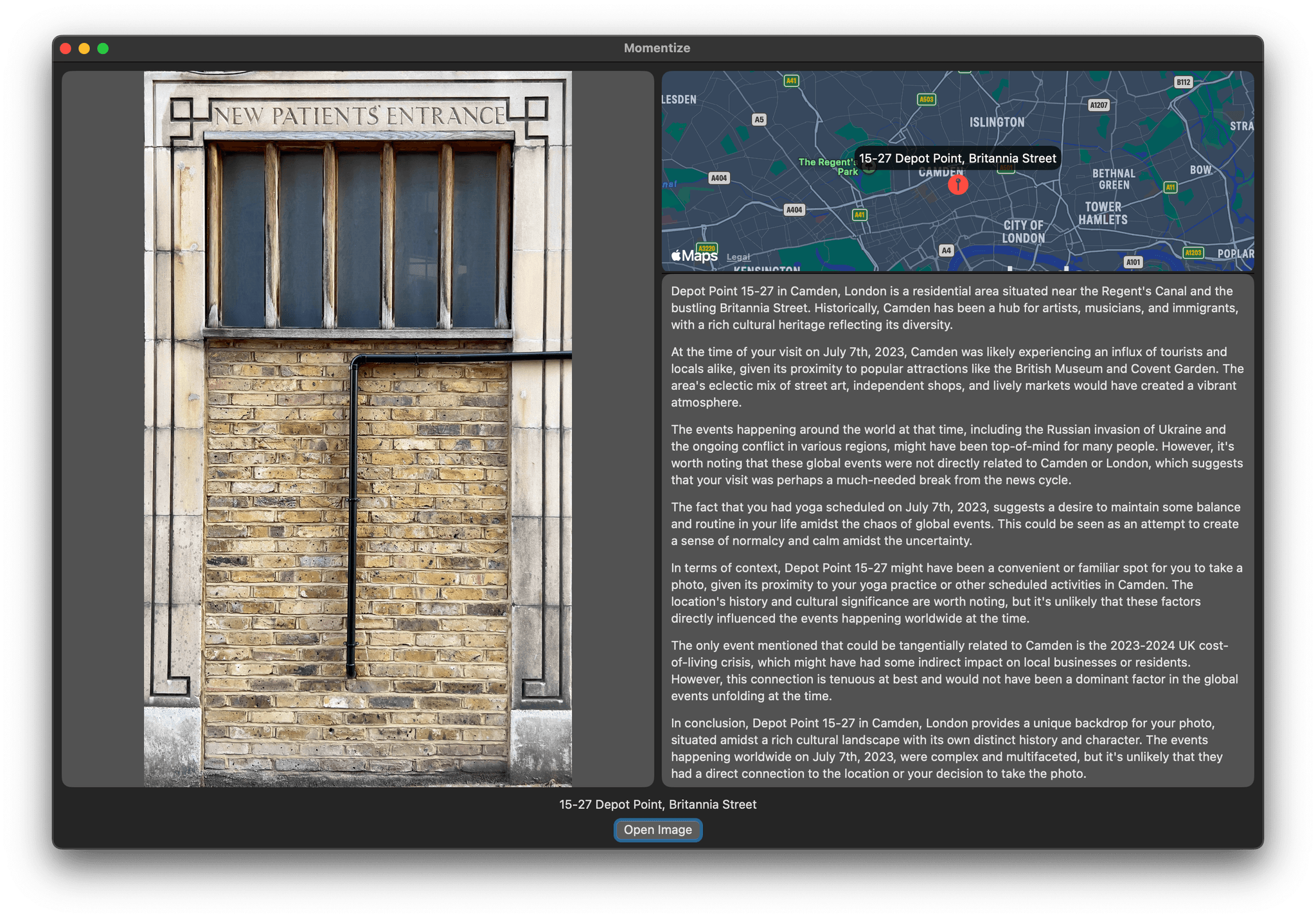

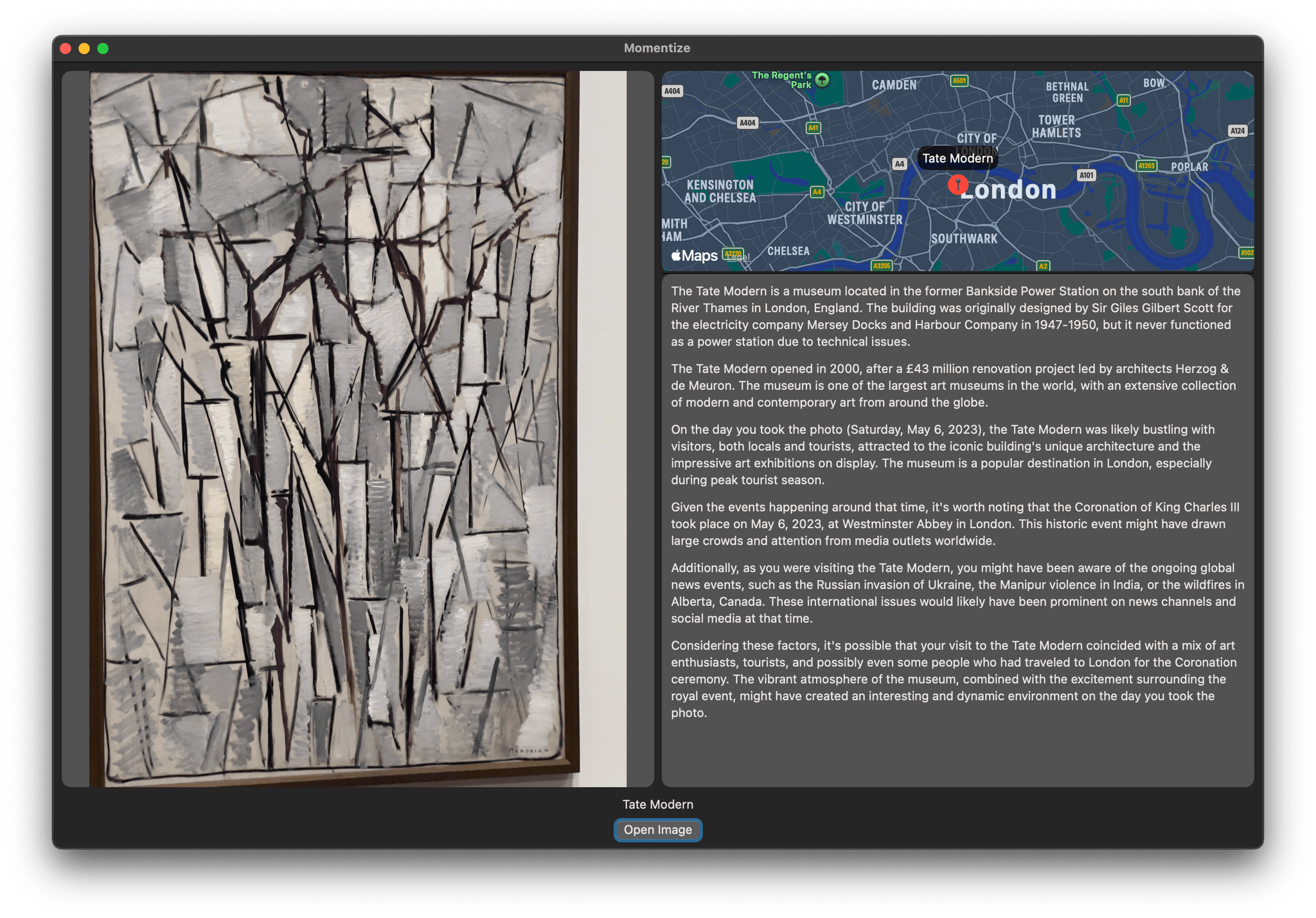

I made a macOS app in SwiftUI. It would take an image, extract metadata, and assemble a prompt like: "I need to refresh my memory. I have this photo, but I can't remember taking it. Can you give this photo some context so that I can remember the moment? I know I took it at this location on..."

I chose SwiftUI for two reasons. Firstly, I had never used it before, and secondly, it gave me access to really sophisticated and very easy-to-use APIs like Image I/O and MapKit, making extracting metadata and mapping it a breeze.
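To give a feel for how little code the metadata step needs, here is a minimal sketch of reading the capture date and GPS position with Image I/O. It is not the app's actual code: `PhotoMetadata`, `parseExifDate`, `signedCoordinate`, and `readMetadata` are hypothetical names made up for this illustration, and treating the EXIF timestamp as UTC is a simplifying assumption, since that field carries no time zone.

```swift
import Foundation
import ImageIO

/// Hypothetical container for the few fields a prompt would need.
struct PhotoMetadata {
    var dateTaken: Date?
    var latitude: Double?
    var longitude: Double?
}

/// Parses an EXIF timestamp such as "2019:08:14 17:32:05".
/// Assumption: the value is treated as UTC because EXIF stores no zone here.
func parseExifDate(_ text: String) -> Date? {
    let formatter = DateFormatter()
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = TimeZone(identifier: "UTC")
    formatter.dateFormat = "yyyy:MM:dd HH:mm:ss"
    return formatter.date(from: text)
}

/// EXIF stores coordinates unsigned, with a separate N/S or E/W reference.
func signedCoordinate(_ value: Double, ref: String?) -> Double {
    (ref == "S" || ref == "W") ? -value : value
}

/// Reads date and location from the first image in the file, if present.
func readMetadata(from url: URL) -> PhotoMetadata? {
    guard let source = CGImageSourceCreateWithURL(url as CFURL, nil),
          let properties = CGImageSourceCopyPropertiesAtIndex(source, 0, nil)
            as? [CFString: Any]
    else { return nil }

    var result = PhotoMetadata()
    if let exif = properties[kCGImagePropertyExifDictionary] as? [CFString: Any],
       let raw = exif[kCGImagePropertyExifDateTimeOriginal] as? String {
        result.dateTaken = parseExifDate(raw)
    }
    if let gps = properties[kCGImagePropertyGPSDictionary] as? [CFString: Any],
       let lat = gps[kCGImagePropertyGPSLatitude] as? Double,
       let lon = gps[kCGImagePropertyGPSLongitude] as? Double {
        result.latitude = signedCoordinate(
            lat, ref: gps[kCGImagePropertyGPSLatitudeRef] as? String)
        result.longitude = signedCoordinate(
            lon, ref: gps[kCGImagePropertyGPSLongitudeRef] as? String)
    }
    return result
}
```

The coordinates that come out of this can be handed to MapKit as a `CLLocationCoordinate2D` to place the photo on a map.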

My ultimate goal was to run everything locally, including the LLMs. I was keen to test as many different models as possible, and LM Studio was a brilliant tool to quickly and reliably manage multiple models.
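LM Studio can expose the loaded model through a local, OpenAI-compatible HTTP server, so talking to it from Swift is mostly a matter of posting JSON. The sketch below assumes the server is running on its default address, `http://localhost:1234/v1`; the model name, the temperature value, and the function names are placeholders for illustration, not what the app necessarily used.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

struct ChatMessage: Codable {
    let role: String
    let content: String
}

struct ChatRequest: Codable {
    let model: String
    let messages: [ChatMessage]
    let temperature: Double
}

struct ChatResponse: Codable {
    struct Choice: Codable { let message: ChatMessage }
    let choices: [Choice]
}

/// Builds a chat-completions request for a local server.
/// Assumption: the base URL is LM Studio's default local server address.
func makeChatRequest(
    prompt: String,
    model: String,
    baseURL: URL = URL(string: "http://localhost:1234/v1")!
) throws -> URLRequest {
    let endpoint = baseURL
        .appendingPathComponent("chat")
        .appendingPathComponent("completions")
    var request = URLRequest(url: endpoint)
    request.httpMethod = "POST"
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    let body = ChatRequest(
        model: model,
        messages: [ChatMessage(role: "user", content: prompt)],
        temperature: 0.2  // illustrative low value to reduce randomness
    )
    request.httpBody = try JSONEncoder().encode(body)
    return request
}

/// Extracts the text of the first choice from a response body.
func firstReply(in data: Data) throws -> String? {
    try JSONDecoder().decode(ChatResponse.self, from: data)
        .choices.first?.message.content
}
```

Sending the request is then a single `URLSession` call, and swapping models only means changing the `model` string, which is what makes comparing several of them cheap.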

I, of course, started with RAG. I fed emails, calendars, and historical data into several off-the-shelf RAG solutions I was able to run on my machine, and was as disappointed with the facts about my life as I was with the facts the machine collated about the world. Close enough to be plausible, stated with utmost confidence, and yet often factually inaccurate. I know that this is because I mainly fed it tabular data of dates and events, but still. It was inaccurate enough to be misleading.

So I changed tack. Honestly, I was spurred into this after I read about GenSQL from MIT.

Why would we want to treat this intuitive, sensitive, and creative machine we have created in any other way than our own intuitive, sensitive, and creative minds? Separate data and facts from feelings and intuitions. Let facts be facts, and use the power of intuition and emotion to color and interpret.

I wanted to see how much better the output would be if I didn't let the model go wild: if I provided all the information and asked it just for interpretation.

Using EventKit, it was easy to extract all the calendar info, but I still wanted to give it some more data to work with. I didn't want to spend too much time querying complex datasets like the GDELT Project, so I settled for the best thing I could find for a quick, hacky demo: the Wikipedia events page, which, of course, is not exposed through the Wikipedia API.
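For the calendar side, a minimal sketch of pulling one day's event titles out of EventKit might look like this. `dayBounds` and `calendarEventTitles` are hypothetical helper names, and the sketch assumes the app has already been granted calendar access; without it, the query simply comes back empty.

```swift
import Foundation
import EventKit

/// Returns the start of the given day and the start of the next one.
func dayBounds(for date: Date, calendar: Calendar = .current)
    -> (start: Date, end: Date)? {
    let start = calendar.startOfDay(for: date)
    guard let end = calendar.date(byAdding: .day, value: 1, to: start)
    else { return nil }
    return (start, end)
}

/// Lists the titles of all calendar events on the day a photo was taken.
/// Assumption: calendar access was requested and granted beforehand.
func calendarEventTitles(on day: Date, store: EKEventStore) -> [String] {
    guard let bounds = dayBounds(for: day) else { return [] }
    let predicate = store.predicateForEvents(
        withStart: bounds.start,
        end: bounds.end,
        calendars: nil  // nil searches every calendar the user has
    )
    return store.events(matching: predicate).map { $0.title ?? "Untitled" }
}
```

Feeding the photo's capture date into `calendarEventTitles` gives the "personal events" list that later goes into the prompt.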

So I used this as a chance to test how well gen AI copes with SwiftUI, and not too long after that, I had a very dirty but prototype-worthy class that would scrape the Wikipedia portal page and spit out nicely formatted headlines for a given date.

Then, I was able to generate a prompt which would list all the events (personal from the calendar and global from Wikipedia) and constrain the model to facts only, which produced really decent results.
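The shape of such a prompt can be sketched in a few lines. Everything here is illustrative: `PhotoContext`, `buildPrompt`, and the exact wording of the instructions are assumptions made for this example, not the prompt the app actually sends.

```swift
import Foundation

/// Hypothetical bundle of everything gathered by the "old-fashioned" code.
struct PhotoContext {
    let dateTaken: String
    let place: String
    let personalEvents: [String]
    let worldEvents: [String]
}

/// Lists the known facts and asks the model to interpret only those.
func buildPrompt(for context: PhotoContext) -> String {
    // Render a list as bullets, with an explicit marker when it is empty
    // so the model is not tempted to fill the gap.
    func bullets(_ items: [String]) -> String {
        items.isEmpty
            ? "- (none recorded)"
            : items.map { "- \($0)" }.joined(separator: "\n")
    }
    return """
    I took a photo on \(context.dateTaken) at \(context.place).

    Personal events that day:
    \(bullets(context.personalEvents))

    World events that day:
    \(bullets(context.worldEvents))

    Using only the facts listed above, describe the context of this photo. \
    Do not add events, names, or dates that are not listed.
    """
}
```

The point of the design is that every fact in the prompt comes from deterministic code, and the model is only asked to turn those facts into a readable story.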

Of course, the personal information I gave the model is super sparse, and global events are almost universally dark and disturbing, which paints a bleak picture of my memories. But that is not important. What is important is that the recipe works.

The winning strategy was to obtain as much information as possible using discrete, old-fashioned technologies, and then ask the LLM to curate it for us. To explain, to guide, to transform the information from a dense, opaque source into a form best suited to our individual needs and abilities. To do what LLMs excel at.

I am really happy to see not only ingenious ways of using genAI to extract and interrogate data, with tools like GenSQL, but also its ability to explain, to guide, and to help us gain real insight. I am really happy to see the proliferation of tools like Google NotebookLM, which are focused on interpretation and transformation, not on knowledge retention.

Because that is what real wisdom is.